AMICI in pyPESTO

After this notebook you can…

…create a pyPESTO problem directly from an AMICI model or through PEtab.

…perform parameter estimation of your amici model and adjust advanced settings for this.

…evaluate an optimization through basic visualizations.

…inspect parameter uncertainties through profile likelihoods and MCMC sampling.

To run optimizations and/or uncertainty analysis, we turn to pyPESTO (Parameter EStimation TOolbox for python).

pyPESTO is a Python tool for parameter estimation. It provides an interface to the model simulation tool AMICI for the simulation of Ordinary Differential Equation (ODE) models specified in the SBML format. With it, we can optimize our model parameters given measurement data, we can do uncertainty analysis via profile likelihoods and/or through sampling methods. pyPESTO provides an interface to many optimizers, global and local, such as e.g. SciPy optimizers, Fides and Pyswarm. Additionally, it interfaces samplers such as pymc, emcee and some of its own samplers.

[1]:

# import

import logging

import random

import tempfile

from pprint import pprint

import amici

import matplotlib as mpl

import numpy as np

import petab

from IPython.display import Markdown, display

import pypesto.optimize as optimize

import pypesto.petab

import pypesto.profile as profile

import pypesto.sample as sample

import pypesto.store as store

import pypesto.visualize as visualize

import pypesto.visualize.model_fit as model_fit

mpl.rcParams["figure.dpi"] = 100

mpl.rcParams["font.size"] = 18

random.seed(1912)

# name of the model that will also be the name of the python module

model_name = "boehm_JProteomeRes2014"

# output directory

model_output_dir = "tmp/" + model_name

1. Create a pyPESTO problem

Create a pyPESTO objective from AMICI

Before we can use AMICI to simulate our model, the SBML model needs to be translated to C++ code. This is done by amici.SbmlImporter.

[2]:

sbml_file = f"./{model_name}/{model_name}.xml"

# Create an SbmlImporter instance for our SBML model

sbml_importer = amici.SbmlImporter(sbml_file)

In this example, we want to specify fixed parameters, observables and a \(\sigma\) parameter. Unfortunately, the latter two are not part of the SBML standard. However, they can be provided to amici.SbmlImporter.sbml2amici as demonstrated in the following.

Constant parameters

Constant parameters, i.e., parameters with respect to which no sensitivities are to be computed (these are often parameters specifying a certain experimental condition) are provided as a list of parameter names.

[3]:

constant_parameters = ["ratio", "specC17"]

Observables

We used SBML’s `AssignmentRule <https://sbml.org/software/libsbml/5.18.0/docs/formatted/python-api/classlibsbml_1_1_assignment_rule.html>`__ as a non-standard way to specify Model outputs within the SBML file. These rules need to be removed prior to the model import (AMICI does at this time not support these rules). This can be easily done using amici.assignmentRules2observables().

In this example, we introduced parameters named observable_* as targets of the observable AssignmentRules. Where applicable we have observable_*_sigma parameters for \(\sigma\) parameters (see below).

[4]:

# Retrieve model output names and formulae from AssignmentRules and remove the respective rules

observables = amici.assignmentRules2observables(

sbml_importer.sbml, # the libsbml model object

filter_function=lambda variable: variable.getId().startswith("observable_")

and not variable.getId().endswith("_sigma"),

)

print("Observables:")

pprint(observables)

Observables:

{'observable_pSTAT5A_rel': {'formula': '(100 * pApB + 200 * pApA * specC17) / '

'(pApB + STAT5A * specC17 + 2 * pApA * '

'specC17)',

'name': 'observable_pSTAT5A_rel'},

'observable_pSTAT5B_rel': {'formula': '-(100 * pApB - 200 * pBpB * (specC17 - '

'1)) / (STAT5B * (specC17 - 1) - pApB + '

'2 * pBpB * (specC17 - 1))',

'name': 'observable_pSTAT5B_rel'},

'observable_rSTAT5A_rel': {'formula': '(100 * pApB + 100 * STAT5A * specC17 + '

'200 * pApA * specC17) / (2 * pApB + '

'STAT5A * specC17 + 2 * pApA * specC17 '

'- STAT5B * (specC17 - 1) - 2 * pBpB * '

'(specC17 - 1))',

'name': 'observable_rSTAT5A_rel'}}

\(\sigma\) parameters

To specify measurement noise as a parameter, we simply provide a dictionary with observable names as keys and a list of (preexisting) parameter names as values to indicate which sigma parameter is to be used for which observable.

[5]:

sigma_vals = ["sd_pSTAT5A_rel", "sd_pSTAT5B_rel", "sd_rSTAT5A_rel"]

observable_names = observables.keys()

sigmas = dict(zip(list(observable_names), sigma_vals))

pprint(sigmas)

{'observable_pSTAT5A_rel': 'sd_pSTAT5A_rel',

'observable_pSTAT5B_rel': 'sd_pSTAT5B_rel',

'observable_rSTAT5A_rel': 'sd_rSTAT5A_rel'}

Generating the module

Now we can generate the python module for our model. amici.SbmlImporter.sbml2amici will symbolically derive the sensitivity equations, generate C++ code for model simulation, and assemble the python module.

[6]:

%%time

sbml_importer.sbml2amici(

model_name,

model_output_dir,

verbose=False,

observables=observables,

constant_parameters=constant_parameters,

sigmas=sigmas,

)

CPU times: user 1.97 s, sys: 32.3 ms, total: 2 s

Wall time: 22 s

Importing the module and loading the model

If everything went well, we are ready to load the newly generated model:

[7]:

model_module = amici.import_model_module(model_name, model_output_dir)

Afterwards, we can get an instance of our model from which we can retrieve information such as parameter names:

[8]:

model = model_module.getModel()

print("Model parameters:", list(model.getParameterIds()))

print("Model outputs: ", list(model.getObservableIds()))

print("Model states: ", list(model.getStateIds()))

Model parameters: ['Epo_degradation_BaF3', 'k_exp_hetero', 'k_exp_homo', 'k_imp_hetero', 'k_imp_homo', 'k_phos', 'sd_pSTAT5A_rel', 'sd_pSTAT5B_rel', 'sd_rSTAT5A_rel']

Model outputs: ['observable_pSTAT5A_rel', 'observable_pSTAT5B_rel', 'observable_rSTAT5A_rel']

Model states: ['STAT5A', 'STAT5B', 'pApB', 'pApA', 'pBpB', 'nucpApA', 'nucpApB', 'nucpBpB']

Running simulations and analyzing results

After importing the model, we can run simulations using amici.runAmiciSimulation. This requires a Model instance and a Solver instance. But, in order go gain a value of fit, we also need to provide some data.

[9]:

# we prepare our data as it is reported in the benchmark collection

# timepoints

timepoints = np.array(

[

0.0,

2.5,

5.0,

10.0,

15.0,

20.0,

30.0,

40.0,

50.0,

60.0,

80.0,

100.0,

120.0,

160.0,

200.0,

240.0,

]

)

# measurements

meas_pSTAT5A_rel = np.array(

[

7.901073,

66.363494,

81.171324,

94.730308,

95.116483,

91.441717,

91.257099,

93.672298,

88.754233,

85.269703,

81.132395,

76.135928,

65.248059,

42.599659,

25.157798,

15.430182,

]

)

meas_pSTAT5B_rel = np.array(

[

4.596533,

29.634546,

46.043806,

81.974734,

80.571609,

79.035720,

75.672380,

71.624720,

69.062863,

67.147384,

60.899476,

54.809258,

43.981290,

29.771458,

20.089017,

10.961845,

]

)

meas_rSTAT5A_rel = np.array(

[

14.723168,

33.762342,

36.799851,

49.717602,

46.928120,

47.836575,

46.928727,

40.597753,

43.783664,

44.457388,

41.327159,

41.062733,

39.235830,

36.619461,

34.893714,

32.211077,

]

)

[10]:

benchmark_parameters = np.array(

[

-1.568917588,

-4.999704894,

-2.209698782,

-1.786006548,

4.990114009,

4.197735488,

0.585755271,

0.818982819,

0.498684404,

]

)

# set timepoints for which we want to simulate the model

model.setTimepoints(timepoints)

# set fixed parameters for which we want to simulate the model

model.setFixedParameters(np.array([0.693, 0.107]))

# set parameters to optimal values found in the benchmark collection

model.setParameterScale(amici.ParameterScaling.log10)

model.setParameters(benchmark_parameters)

# Create solver instance

solver = model.getSolver()

# Run simulation using model parameters from the benchmark collection and default solver options

rdata = amici.runAmiciSimulation(model, solver)

[11]:

# Create edata instance with dimensions and timepoints

edata = amici.ExpData(

3, # number of observables

0, # number of event outputs

0, # maximum number of events

timepoints, # timepoints

)

# set observed data

edata.setObservedData(meas_pSTAT5A_rel, 0)

edata.setObservedData(meas_pSTAT5B_rel, 1)

edata.setObservedData(meas_rSTAT5A_rel, 2)

# set standard deviations to optimal values found in the benchmark collection

edata.setObservedDataStdDev(np.array(16 * [10**0.585755271]), 0)

edata.setObservedDataStdDev(np.array(16 * [10**0.818982819]), 1)

edata.setObservedDataStdDev(np.array(16 * [10**0.498684404]), 2)

[12]:

rdata = amici.runAmiciSimulation(model, solver, edata)

print("Chi2 value reported in benchmark collection: 47.9765479")

print(f"chi2 value using AMICI: {rdata['chi2']}")

Chi2 value reported in benchmark collection: 47.9765479

chi2 value using AMICI: 47.97654319310132

Creating pyPESTO objective

We are now set up to create our pyPESTO objective. This objective is a vital part of the pyPESTO infrastructure as it provides a blackbox interface to call any predefined objective function with some parameters and evaluate it. We can easily create an AmiciObjective by supplying the model, an amici solver and the data.

Keep in mind, however, that you can use ANY function you would like for this.

[13]:

# we make some more adjustments to our model and the solver

model.requireSensitivitiesForAllParameters()

solver.setSensitivityMethod(amici.SensitivityMethod.forward)

solver.setSensitivityOrder(amici.SensitivityOrder.first)

objective = pypesto.AmiciObjective(

amici_model=model, amici_solver=solver, edatas=[edata], max_sensi_order=1

)

We can now call the objective function directly for any parameter. The value that is put out is the likelihood function. If we want to interact more with the AMICI returns, we can also return this by call and e.g., retrieve the chi2 value.

[14]:

# the generic objective call

print(f"Objective value: {objective(benchmark_parameters)}")

# a call returning the AMICI data as well

obj_call_with_dict = objective(benchmark_parameters, return_dict=True)

print(

f'Chi^2 value of the same parameters: {obj_call_with_dict["rdatas"][0]["chi2"]}'

)

Objective value: 138.22199735603812

Chi^2 value of the same parameters: 47.97654319310132

Now this makes the whole process already somewhat easier, but still, getting here took us quite some coding and effort. This will only get more complicated, the more complex the model is. Therefore, in the next part, we will show you how to bypass the tedious lines of code by using PEtab.

Create a pyPESTO problem + objective from Petab

Background on PEtab

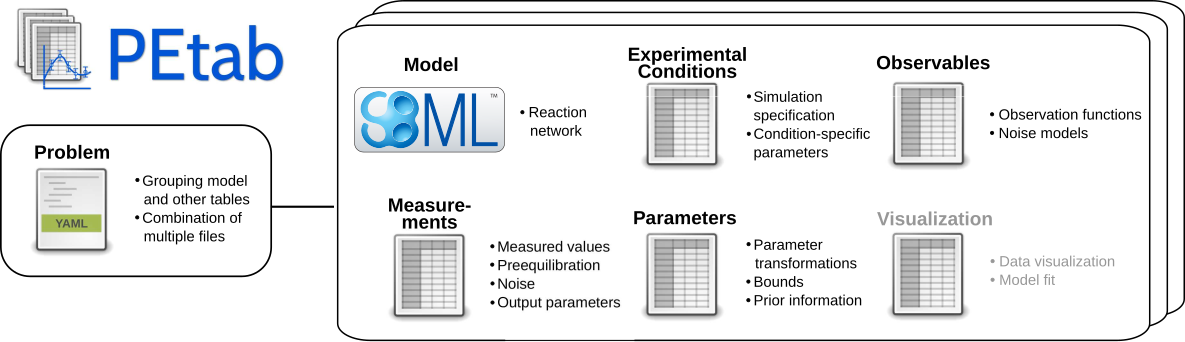

pyPESTO supports the PEtab standard. PEtab is a data format for specifying parameter estimation problems in systems biology.

A PEtab problem consist of an SBML file, defining the model topology and a set of .tsv files, defining experimental conditions, observables, measurements and parameters (and their optimization bounds, scale, priors…). All files that make up a PEtab problem can be structured in a .yaml file. The pypesto.Objective coming from a PEtab problem corresponds to the negative-log-likelihood/negative-log-posterior distribution of the parameters.

For more details on PEtab, the interested reader is referred to PEtab’s format definition, for examples the reader is referred to the PEtab benchmark collection. The Model from Böhm et al. JProteomRes 2014 is part of the benchmark collection and will be used as the running example throughout this notebook.

[15]:

%%capture

petab_yaml = f"./{model_name}/{model_name}.yaml"

petab_problem = petab.Problem.from_yaml(petab_yaml)

importer = pypesto.petab.PetabImporter(petab_problem)

problem = importer.create_problem(verbose=False)

Compiling amici model to folder /home/docs/checkouts/readthedocs.org/user_builds/pypesto/checkouts/stable/doc/example/amici_models/0.23.1/FullModel.

[16]:

# Check the dataframes. First the parameter dataframe

petab_problem.parameter_df.head()

[16]:

| parameterName | parameterScale | lowerBound | upperBound | nominalValue | estimate | |

|---|---|---|---|---|---|---|

| parameterId | ||||||

| Epo_degradation_BaF3 | EPO_{degradation,BaF3} | log10 | 0.00001 | 100000 | 0.026983 | 1 |

| k_exp_hetero | k_{exp,hetero} | log10 | 0.00001 | 100000 | 0.000010 | 1 |

| k_exp_homo | k_{exp,homo} | log10 | 0.00001 | 100000 | 0.006170 | 1 |

| k_imp_hetero | k_{imp,hetero} | log10 | 0.00001 | 100000 | 0.016368 | 1 |

| k_imp_homo | k_{imp,homo} | log10 | 0.00001 | 100000 | 97749.379402 | 1 |

[17]:

# Check the observable dataframe

petab_problem.observable_df.head()

[17]:

| observableName | observableFormula | noiseFormula | observableTransformation | noiseDistribution | |

|---|---|---|---|---|---|

| observableId | |||||

| pSTAT5A_rel | NaN | (100 * pApB + 200 * pApA * specC17) / (pApB + ... | noiseParameter1_pSTAT5A_rel | lin | normal |

| pSTAT5B_rel | NaN | -(100 * pApB - 200 * pBpB * (specC17 - 1)) / (... | noiseParameter1_pSTAT5B_rel | lin | normal |

| rSTAT5A_rel | NaN | (100 * pApB + 100 * STAT5A * specC17 + 200 * p... | noiseParameter1_rSTAT5A_rel | lin | normal |

[18]:

# Check the measurement dataframe

petab_problem.measurement_df.head()

[18]:

| observableId | preequilibrationConditionId | simulationConditionId | measurement | time | observableParameters | noiseParameters | datasetId | |

|---|---|---|---|---|---|---|---|---|

| 0 | pSTAT5A_rel | NaN | model1_data1 | 7.901073 | 0.0 | NaN | sd_pSTAT5A_rel | model1_data1_pSTAT5A_rel |

| 1 | pSTAT5A_rel | NaN | model1_data1 | 66.363494 | 2.5 | NaN | sd_pSTAT5A_rel | model1_data1_pSTAT5A_rel |

| 2 | pSTAT5A_rel | NaN | model1_data1 | 81.171324 | 5.0 | NaN | sd_pSTAT5A_rel | model1_data1_pSTAT5A_rel |

| 3 | pSTAT5A_rel | NaN | model1_data1 | 94.730308 | 10.0 | NaN | sd_pSTAT5A_rel | model1_data1_pSTAT5A_rel |

| 4 | pSTAT5A_rel | NaN | model1_data1 | 95.116483 | 15.0 | NaN | sd_pSTAT5A_rel | model1_data1_pSTAT5A_rel |

[19]:

# check the condition dataframe

petab_problem.condition_df.head()

[19]:

| conditionName | |

|---|---|

| conditionId | |

| model1_data1 | condition1 |

This was really straightforward. With this, we are still able to do all the same things we did before and also adjust solver setting, change the model, etc.

[20]:

# call the objective function

print(f"Objective value: {problem.objective(benchmark_parameters)}")

# change things in the model

problem.objective.amici_model.requireSensitivitiesForAllParameters()

# change solver settings

print(

f"Absolute tolerance before change: {problem.objective.amici_solver.getAbsoluteTolerance()}"

)

problem.objective.amici_solver.setAbsoluteTolerance(1e-15)

print(

f"Absolute tolerance after change: {problem.objective.amici_solver.getAbsoluteTolerance()}"

)

Objective value: 138.22199736863757

Absolute tolerance before change: 1e-16

Absolute tolerance after change: 1e-15

Now we are good to go and start the first optimization.

2. Optimization

Once setup, the optimization can be done very quickly with default settings. If needed, these settings can be highly individualized and change according to the needs of our model. In this section, we shall go over some of these settings.

Optimizer

The optimizer determines the algorithm with which we optimize our model. The main disjunction is between global and local optimizers.

pyPESTO provides an interface to many optimizers, such as Fides, ScipyOptimizers, Pyswarm and many more. For a whole list of supported optimizers with settings for each optimizer you can have a look here.

[21]:

optimizer_options = {"maxiter": 1e4, "fatol": 1e-12, "frtol": 1e-12}

optimizer = optimize.FidesOptimizer(

options=optimizer_options, verbose=logging.WARN

)

Startpoint method

The startpoint method describes how you want to choose your startpoints, in case you do a multistart optimization. The default here is uniform meaning that each startpoint is a uniform sample from the allowed parameter space. The other two notable options are either latin_hypercube or a self defined function.

[22]:

startpoint_method = pypesto.startpoint.uniform

History options

In some cases, it is good to trace what the optimizer did in each step, i.e., the history. There is a multitude of options on what to report here, but the most important one is trace_record which turns the history function on and off.

[23]:

# save optimizer trace

history_options = pypesto.HistoryOptions(trace_record=True)

Optimization options

Some further possible options for the optimization. Notably allow_failed_starts, which in case of a very complicated objective function, can help get to the desired number of optimizations when turned off. As we do not need this here, we create the default options.

[24]:

opt_options = optimize.OptimizeOptions()

opt_options

[24]:

{'allow_failed_starts': True,

'report_sres': True,

'report_hess': True,

'history_beats_optimizer': True}

Running the optimization

We now only need to decide on the number of starts as well as the engine we want to use for the optimization.

[25]:

n_starts = 20 # usually a value >= 100 should be used

engine = pypesto.engine.MultiProcessEngine()

Engine will use up to 2 processes (= CPU count).

[26]:

%%time

# Set seed for reproducibility

np.random.seed(1)

result = optimize.minimize(

problem=problem,

optimizer=optimizer,

n_starts=n_starts,

startpoint_method=startpoint_method,

engine=engine,

options=opt_options,

)

<timed exec>:3: DeprecationWarning: Passing `startpoint_method` directly is deprecated, use `problem.startpoint_method` instead.

2024-04-15 14:19:49 fides(WARNING) Stopping as trust region radius 1.68E-16 is smaller than machine precision.

2024-04-15 14:19:49.346 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 35.5904 and h = 3.90966e-06, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:19:49.348 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 35.5904: AMICI failed to integrate the forward problem

2024-04-15 14:19:49.644 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 123.941 and h = 3.28923e-06, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:19:49.645 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 123.941: AMICI failed to integrate the forward problem

2024-04-15 14:19:50.251 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 82.1684 and h = 1.31298e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:19:50.253 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 82.1684: AMICI failed to integrate the forward problem

2024-04-15 14:19:51 fides(WARNING) Stopping as trust region radius 1.18E-16 is smaller than machine precision.

2024-04-15 14:19:51 fides(WARNING) Stopping as trust region radius 2.03E-16 is smaller than machine precision.

2024-04-15 14:19:54 fides(WARNING) Stopping as trust region radius 1.29E-16 is smaller than machine precision.

2024-04-15 14:19:54.618 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.763 and h = 3.35249e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:19:54.620 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.763: AMICI failed to integrate the forward problem

2024-04-15 14:19:55 fides(WARNING) Stopping as trust region radius 7.28E-17 is smaller than machine precision.

2024-04-15 14:19:55.900 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.402 and h = 4.37689e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:19:55.905 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.402: AMICI failed to integrate the forward problem

2024-04-15 14:19:56 fides(WARNING) Stopping as trust region radius 9.67E-17 is smaller than machine precision.

2024-04-15 14:19:59 fides(WARNING) Stopping as trust region radius 1.82E-16 is smaller than machine precision.

2024-04-15 14:20:01 fides(WARNING) Stopping as trust region radius 1.14E-16 is smaller than machine precision.

2024-04-15 14:20:04.359 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 88.9992 and h = 1.33888e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:04.363 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 88.9992: AMICI failed to integrate the forward problem

2024-04-15 14:20:04.675 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 89.1545 and h = 6.0377e-06, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:04.680 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 89.1545: AMICI failed to integrate the forward problem

2024-04-15 14:20:04 fides(WARNING) Stopping as trust region radius 5.92E-17 is smaller than machine precision.

2024-04-15 14:20:07.385 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.497 and h = 3.04713e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:07.386 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.497: AMICI failed to integrate the forward problem

2024-04-15 14:20:07 fides(WARNING) Stopping as trust region radius 1.22E-16 is smaller than machine precision.

2024-04-15 14:20:07 fides(WARNING) Stopping as trust region radius 7.57E-17 is smaller than machine precision.

2024-04-15 14:20:08.752 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 144.824 and h = 2.37436e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:08.757 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 144.824: AMICI failed to integrate the forward problem

2024-04-15 14:20:09.612 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.845 and h = 2.68581e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:09.616 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.845: AMICI failed to integrate the forward problem

2024-04-15 14:20:10.360 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 88.6285 and h = 2.81085e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:10.364 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 88.6285: AMICI failed to integrate the forward problem

2024-04-15 14:20:10 fides(WARNING) Stopping as trust region radius 2.22E-16 is smaller than machine precision.

2024-04-15 14:20:12.454 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 168.825 and h = 5.42255e-06, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:12.458 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 168.825: AMICI failed to integrate the forward problem

2024-04-15 14:20:13 fides(WARNING) Stopping as trust region radius 1.28E-16 is smaller than machine precision.

2024-04-15 14:20:14.958 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 89.4224 and h = 2.79644e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:14.962 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 89.4224: AMICI failed to integrate the forward problem

2024-04-15 14:20:15 fides(WARNING) Stopping as trust region radius 1.11E-16 is smaller than machine precision.

2024-04-15 14:20:18 fides(WARNING) Stopping as trust region radius 1.37E-16 is smaller than machine precision.

2024-04-15 14:20:20 fides(WARNING) Stopping as trust region radius 2.21E-16 is smaller than machine precision.

2024-04-15 14:20:22.230 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.199 and h = 2.58705e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:22.235 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.199: AMICI failed to integrate the forward problem

2024-04-15 14:20:22.812 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.288 and h = 4.1482e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:22.816 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.288: AMICI failed to integrate the forward problem

2024-04-15 14:20:23.344 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 87.8648 and h = 3.35146e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:23.348 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 87.8648: AMICI failed to integrate the forward problem

2024-04-15 14:20:23.646 - amici.swig_wrappers - DEBUG - [model1_data1][CVODES:CVode:ERR_FAILURE] AMICI ERROR: in module CVODES in function CVode : At t = 145.576 and h = 3.23036e-05, the error test failed repeatedly or with |h| = hmin.

2024-04-15 14:20:23.650 - amici.swig_wrappers - ERROR - [model1_data1][FORWARD_FAILURE] AMICI forward simulation failed at t = 145.576: AMICI failed to integrate the forward problem

2024-04-15 14:20:23 fides(WARNING) Stopping as trust region radius 5.67E-17 is smaller than machine precision.

2024-04-15 14:20:24 fides(WARNING) Stopping as trust region radius 2.22E-16 is smaller than machine precision.

2024-04-15 14:20:26 fides(WARNING) Stopping as trust region radius 1.15E-16 is smaller than machine precision.

CPU times: user 167 ms, sys: 42.6 ms, total: 210 ms

Wall time: 39.9 s

Now as a first step after the optimization, we can take a look at the summary of the optimizer:

[27]:

display(Markdown(result.summary()))

Optimization Result

number of starts: 20

best value: 138.22201757830067, id=12

worst value: 249.74599744333565, id=4

number of non-finite values: 0

execution time summary:

Mean execution time: 3.826s

Maximum execution time: 10.551s, id=12

Minimum execution time: 0.990s, id=4

summary of optimizer messages:

Count

Message

19

Trust Region Radius too small to proceed

1

Converged according to fval difference

best value found (approximately) 2 time(s)

number of plateaus found: 4

A summary of the best run:

Optimizer Result

optimizer used: <FidesOptimizer hessian_update=default verbose=30 options={‘maxiter’: 10000.0, ‘fatol’: 1e-12, ‘frtol’: 1e-12}>

message: Trust Region Radius too small to proceed

number of evaluations: 259

time taken to optimize: 10.551s

startpoint: [ 1.90896918 4.9732285 -3.27659492 -3.6286425 4.32595463 1.96818161 -4.33999827 2.55463053 2.53876188]

endpoint: [-1.56907787 -4.99921005 -2.20985065 -1.78589495 4.999966 4.19770875 0.58567784 0.81883956 0.49858743]

final objective value: 138.22201757830067

final gradient value: [-8.48144617e-04 5.54610163e-02 -1.08840893e-04 1.75929570e-03 -4.41674387e-05 8.35235697e-05 -1.26609394e-04 7.92584699e-05 7.08049645e-05]

final hessian value: [[ 2.11220199e+03 5.89957331e-01 1.07062735e+02 2.81543237e+03 8.81145472e-06 -7.86472961e+02 1.02539839e+02 -8.02202602e+01 -2.23176257e+01] [ 5.89957331e-01 1.92120978e-03 -1.73093318e-01 7.13397058e-01 -3.61906125e-08 -3.21040034e-01 -1.48968937e-02 -5.69277762e-02 -5.58790395e-02] [ 1.07062735e+02 -1.73093318e-01 7.00024728e+01 1.61456877e+02 7.00638777e-06 -8.83637576e+01 -3.50178590e+00 -3.02861028e+00 6.53064680e+00] [ 2.81543237e+03 7.13397058e-01 1.61456877e+02 3.76259260e+03 8.30380596e-06 -1.04210071e+03 1.32836557e+02 -1.05777532e+02 -2.70630763e+01] [ 8.81145472e-06 -3.61906125e-08 7.00638777e-06 8.30380596e-06 2.73914713e-10 -2.20046238e-04 4.21568121e-05 5.31627059e-05 6.37976793e-06] [-7.86472961e+02 -3.21040034e-01 -8.83637576e+01 -1.04210071e+03 -2.20046238e-04 9.30449003e+02 -8.20815542e+01 4.93154725e+01 3.27658894e+01] [ 1.02539839e+02 -1.48968937e-02 -3.50178590e+00 1.32836557e+02 4.21568121e-05 -8.20815542e+01 8.48306613e+01 0.00000000e+00 0.00000000e+00] [-8.02202602e+01 -5.69277762e-02 -3.02861028e+00 -1.05777532e+02 5.31627059e-05 4.93154725e+01 0.00000000e+00 8.48301873e+01 0.00000000e+00] [-2.23176257e+01 -5.58790395e-02 6.53064680e+00 -2.70630763e+01 6.37976793e-06 3.27658894e+01 0.00000000e+00 0.00000000e+00 8.48302067e+01]]

We can see some informative statistics, such as the mean execution time, best and worst values, a small table on the exit messages of the optimizer as well as detailed info on the best optimizer.

As our best start is just as good as the reported benchmark value, we shall now further inspect the result thorough some useful visualisations.

3. Optimization visualization

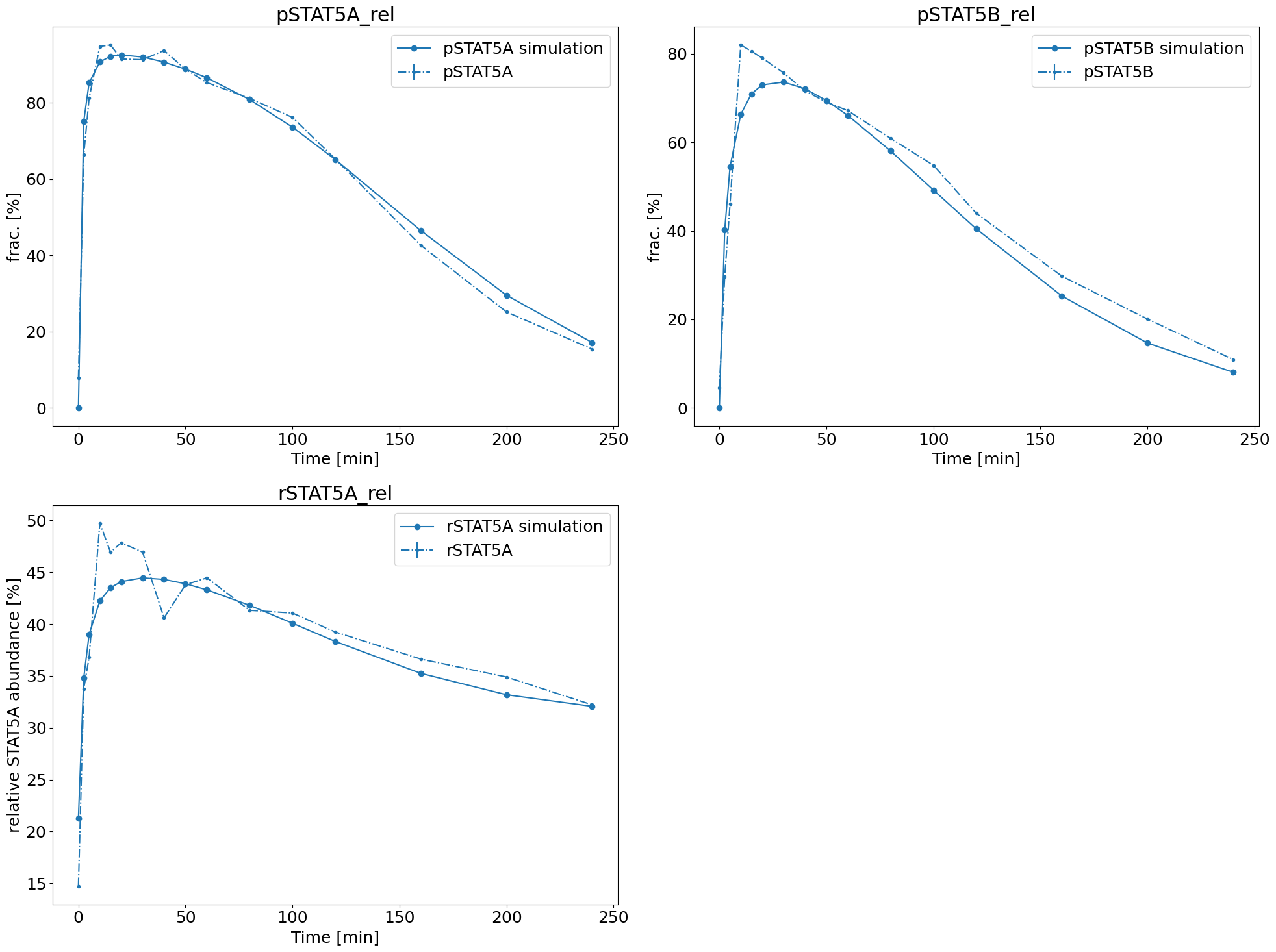

Model fit

Probably the most useful visualization there is, is one, where we visualize the found parameter dynamics against the measurements. This way we can see whether the fit is qualitatively and/or quantitatively good.

[28]:

ax = model_fit.visualize_optimized_model_fit(

petab_problem=petab_problem, result=result, pypesto_problem=problem

)

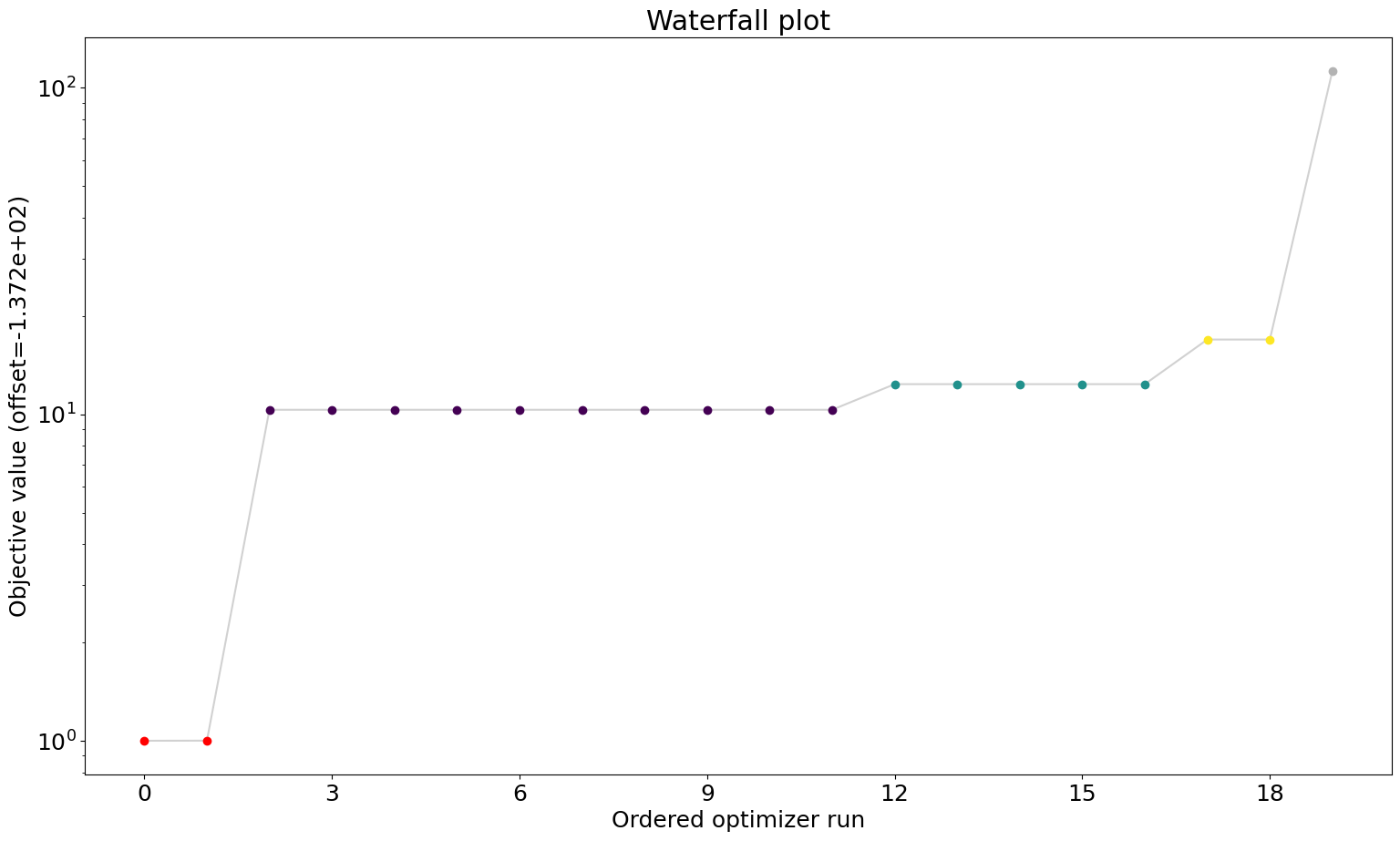

Waterfall plot

The waterfall plot is a visualization of the final objective function values of each start. They are sorted from small to high and then plotted. Similar values will get clustered and get the same color.

This helps to determine whether the result is reproducible and whether we reliably found a local minimum that we hope to be the global one.

[29]:

visualize.waterfall(result);

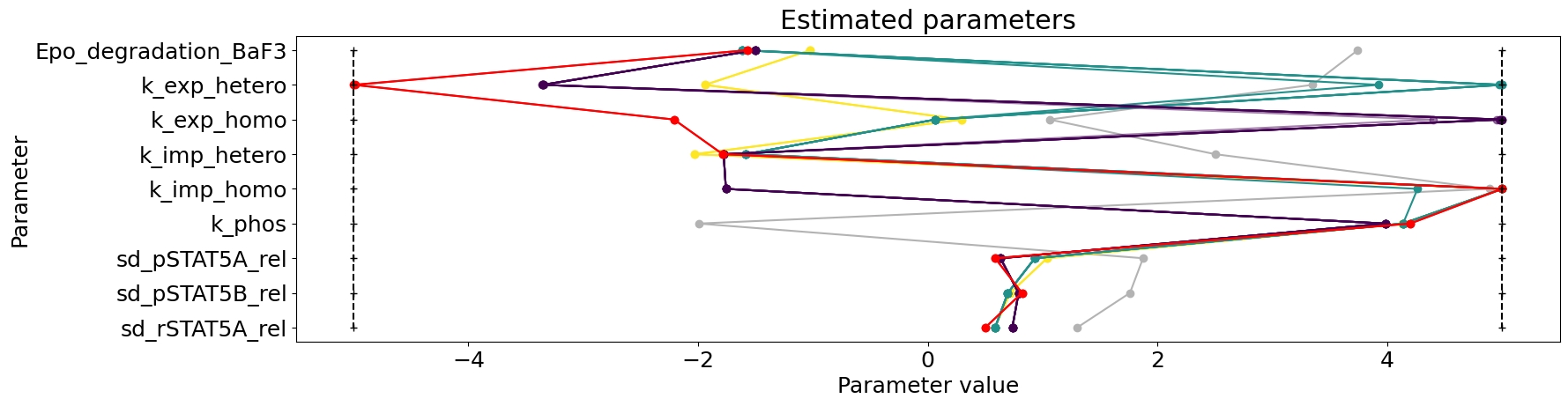

Parameter plots

To visualize the parameters, there is a multitude of options:

Parameter overview

Here we plot the parameters of all starts within their bounds. This can tell us whether some bounds are always hit and might need to be questioned and if the best starts are similar or differ amongst themselves, hinting already for some non-identifiabilities.

[30]:

visualize.parameters(result);

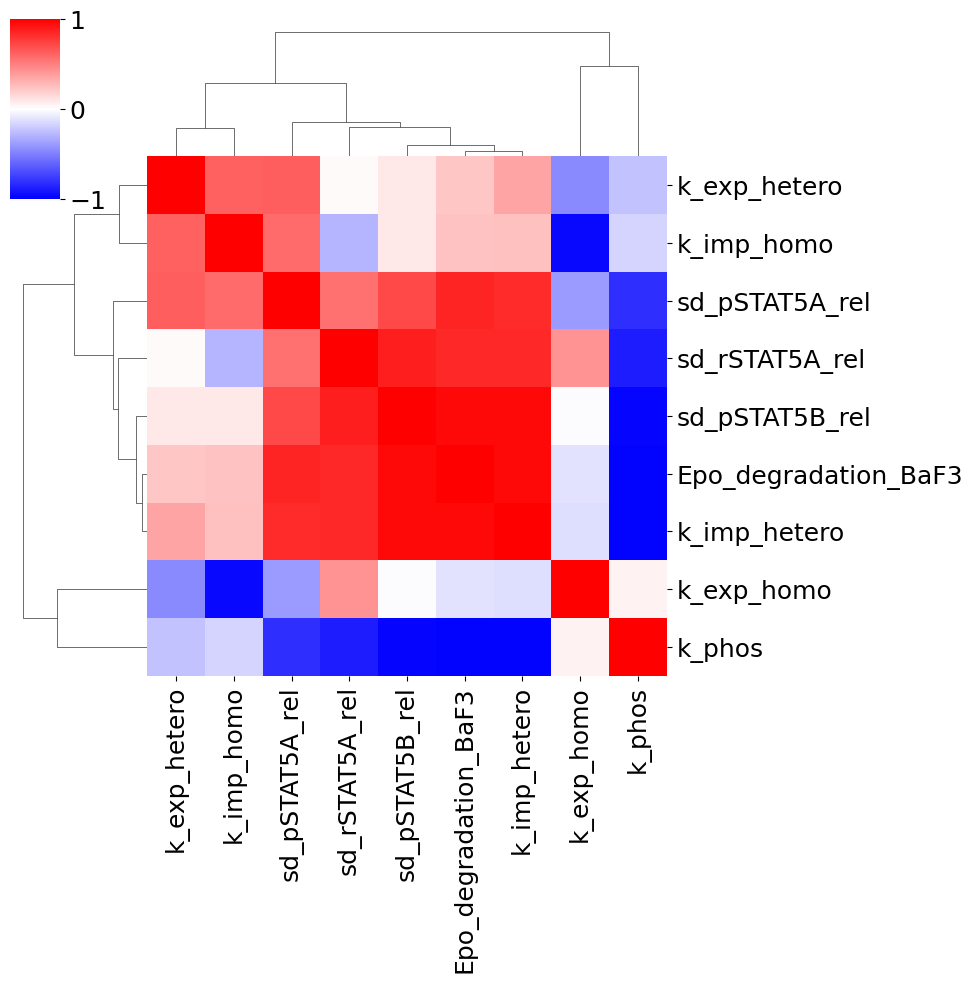

Parameter correlation plot

To further look into possible uncertainties, we can plot the correlation of the final points. Sometimes, pairs of parameters are dependent on each other and fixing one might solve some non-identifiability.

[31]:

visualize.parameters_correlation_matrix(result);

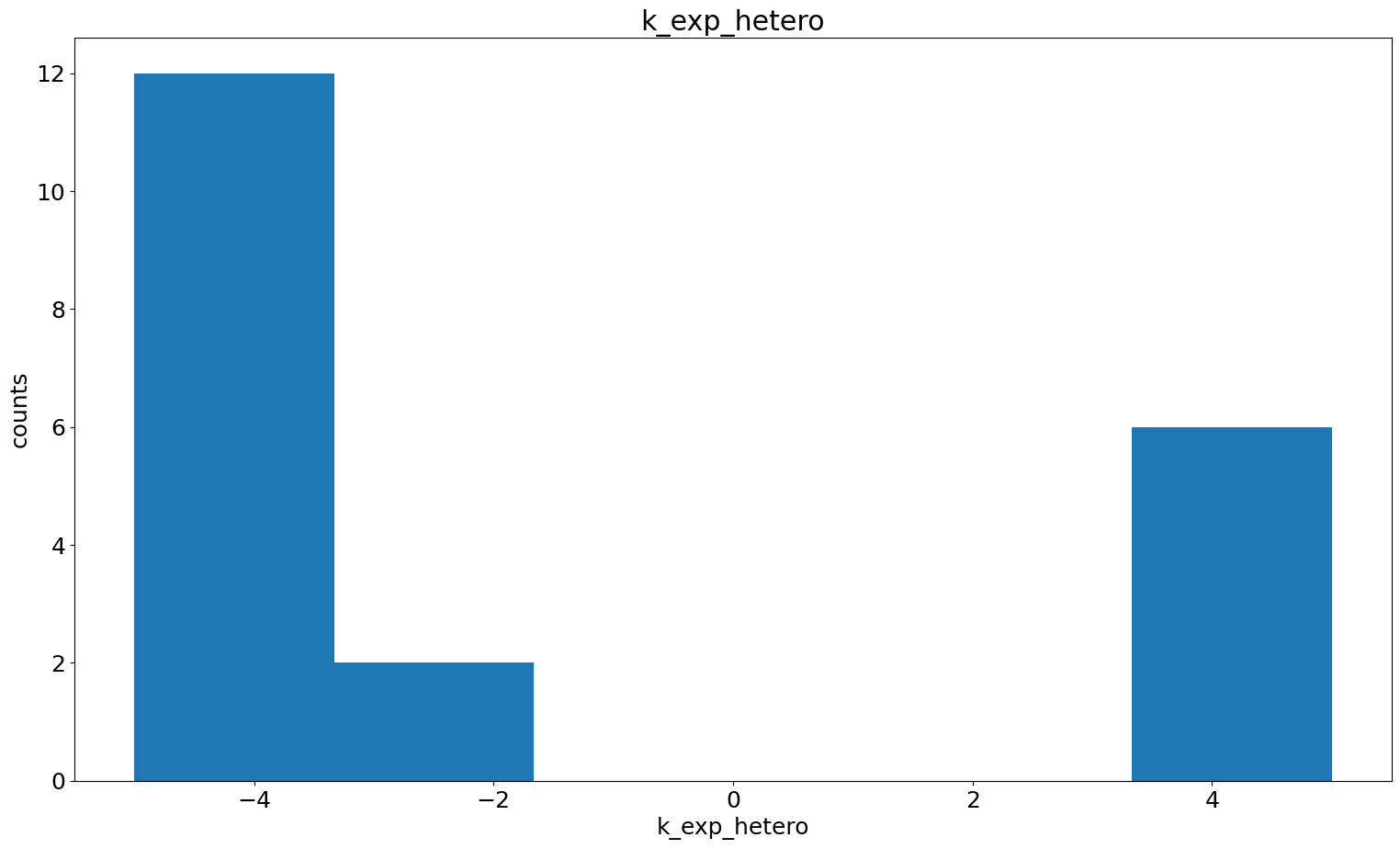

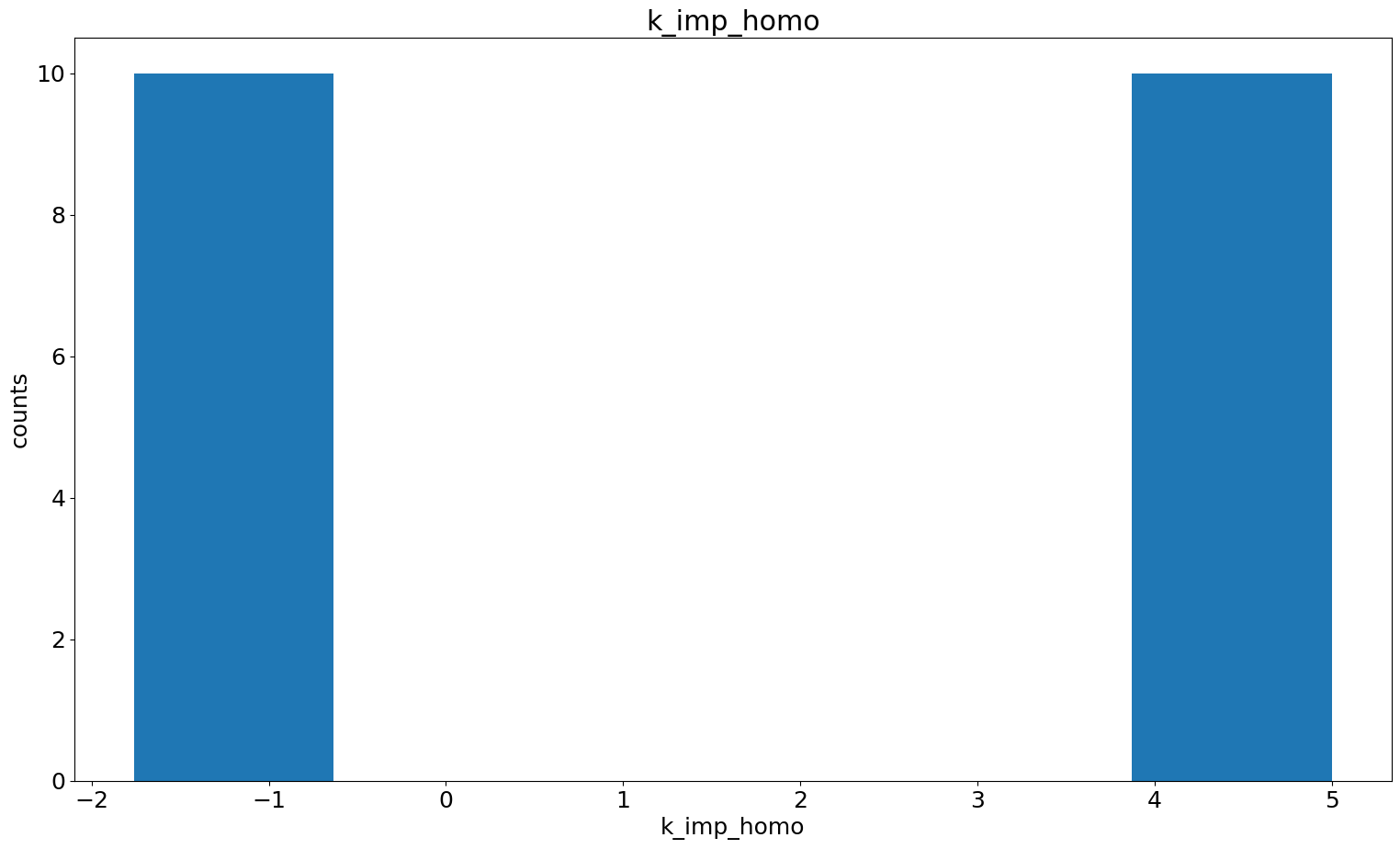

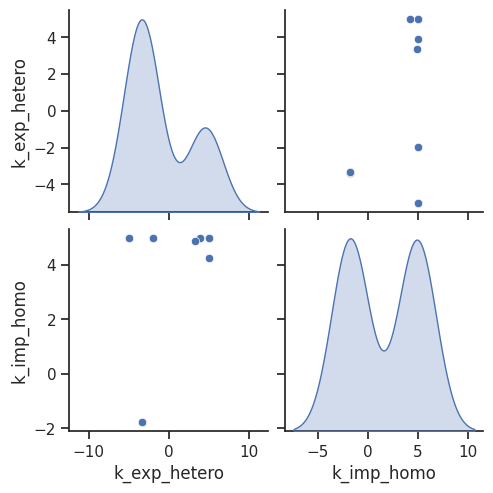

Parameter histogram + scatter

In case we found some dependencies and for further investigation, we can also specifically look at the histograms of certain parameters and the pairwise parameter scatter plot.

[32]:

visualize.parameter_hist(result=result, parameter_name="k_exp_hetero")

visualize.parameter_hist(result=result, parameter_name="k_imp_homo");

[33]:

visualize.optimization_scatter(result, parameter_indices=[1, 4]);

We definitely need to look further into it, and thus we turn to uncertainty quantification in the next section.

4. Uncertainty quantification

This mainly consists of two parts: * Profile Likelihoods * MCMC sampling

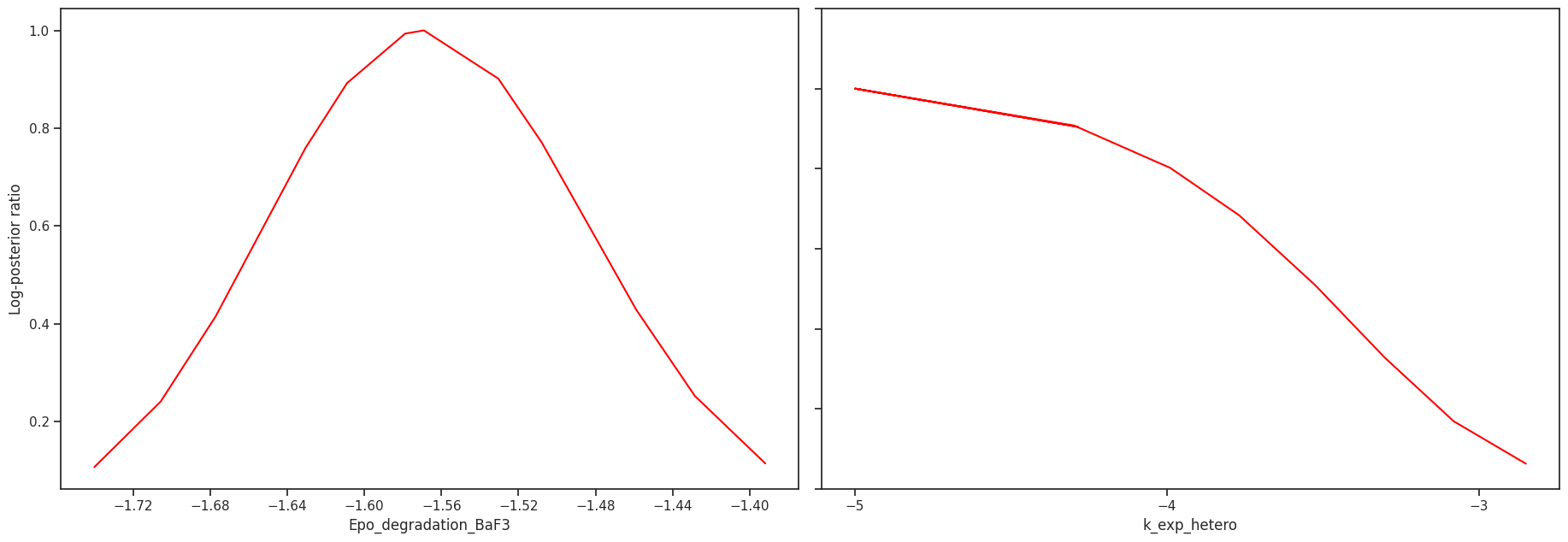

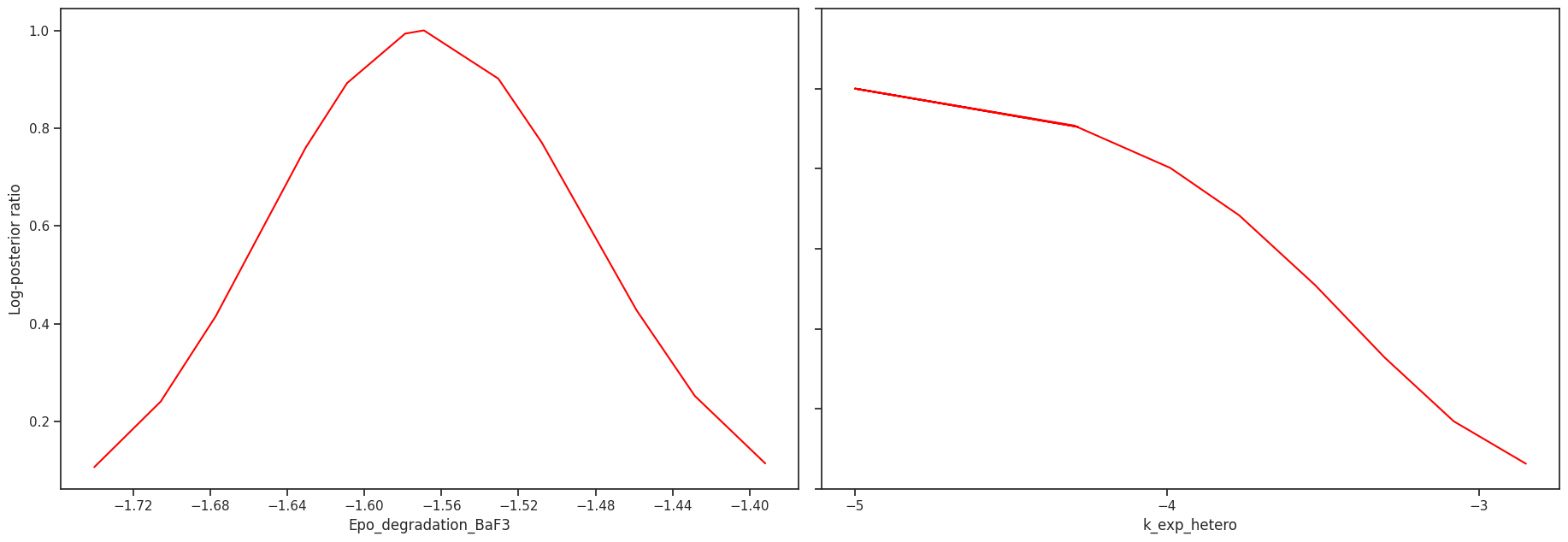

Profile likelihood

The profile likelihood uses an optimization scheme to calculate the confidence intervals for each parameter. We start with the best found parameter set of the optimization. Then in each step, we increase/decrease the parameter of interest, fix it and then run one local optimization. We do this until we either hit the bounds or reach a sufficiently bad fit.

To run the profiling, we do not need a lot of setup, as we did this already for the optimization.

[34]:

%%time

result = profile.parameter_profile(

problem=problem,

result=result,

optimizer=optimizer,

engine=engine,

profile_index=[0, 1],

)

2024-04-15 14:20:32 fides(WARNING) Stopping as trust region radius 1.01E-16 is smaller than machine precision.

2024-04-15 14:20:32 fides(WARNING) Stopping as trust region radius 2.05E-16 is smaller than machine precision.

2024-04-15 14:20:34 fides(WARNING) Stopping as trust region radius 1.29E-16 is smaller than machine precision.

2024-04-15 14:20:35 fides(WARNING) Stopping as trust region radius 8.39E-17 is smaller than machine precision.

2024-04-15 14:20:35 fides(WARNING) Stopping as trust region radius 1.52E-16 is smaller than machine precision.

2024-04-15 14:20:37 fides(WARNING) Stopping as trust region radius 8.44E-17 is smaller than machine precision.

2024-04-15 14:20:38 fides(WARNING) Stopping as trust region radius 1.60E-16 is smaller than machine precision.

2024-04-15 14:20:38 fides(WARNING) Stopping as trust region radius 8.09E-17 is smaller than machine precision.

2024-04-15 14:20:39 fides(WARNING) Stopping as trust region radius 1.08E-16 is smaller than machine precision.

2024-04-15 14:20:40 fides(WARNING) Stopping as trust region radius 1.49E-16 is smaller than machine precision.

2024-04-15 14:20:41 fides(WARNING) Stopping as trust region radius 1.86E-16 is smaller than machine precision.

2024-04-15 14:20:43 fides(WARNING) Stopping as trust region radius 8.70E-17 is smaller than machine precision.

2024-04-15 14:20:43 fides(WARNING) Stopping as trust region radius 7.08E-17 is smaller than machine precision.

2024-04-15 14:20:44 fides(WARNING) Stopping as trust region radius 7.67E-17 is smaller than machine precision.

2024-04-15 14:20:46 fides(WARNING) Stopping as trust region radius 1.88E-16 is smaller than machine precision.

2024-04-15 14:20:47 fides(WARNING) Stopping as trust region radius 1.11E-16 is smaller than machine precision.

2024-04-15 14:20:47 fides(WARNING) Stopping as trust region radius 2.05E-16 is smaller than machine precision.

2024-04-15 14:20:49 fides(WARNING) Stopping as trust region radius 7.95E-17 is smaller than machine precision.

2024-04-15 14:20:49 fides(WARNING) Stopping as trust region radius 1.22E-16 is smaller than machine precision.

2024-04-15 14:20:51 fides(WARNING) Stopping as trust region radius 1.97E-16 is smaller than machine precision.

2024-04-15 14:20:52 fides(WARNING) Stopping as trust region radius 1.94E-16 is smaller than machine precision.

2024-04-15 14:20:53 fides(WARNING) Stopping as trust region radius 1.03E-16 is smaller than machine precision.

2024-04-15 14:20:54 fides(WARNING) Stopping as trust region radius 2.22E-16 is smaller than machine precision.

CPU times: user 39.8 ms, sys: 33.6 ms, total: 73.3 ms

Wall time: 23.8 s

We can visualize the profiles directly

[35]:

# plot profiles

pypesto.visualize.profiles(result);

Sampling

We can use MCMC sampling to get a distribution on the posterior of the parameters. Here again, we do not need a lot of setup. We only need to define a sampler, of which pyPESTO offers a multitude.

[36]:

# Sampling

sampler = sample.AdaptiveMetropolisSampler()

result = sample.sample(

problem=problem,

sampler=sampler,

n_samples=5000,

result=result,

)

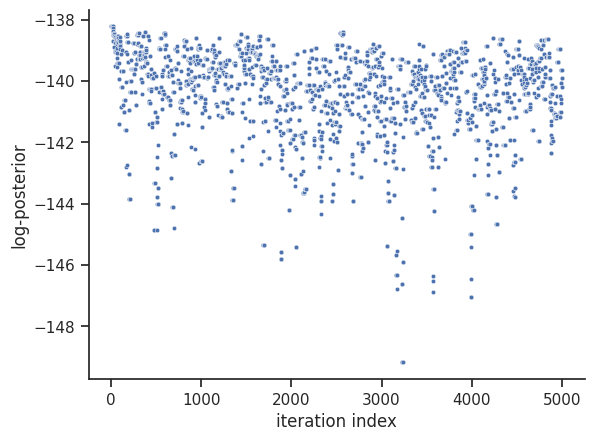

Elapsed time: 16.402129494

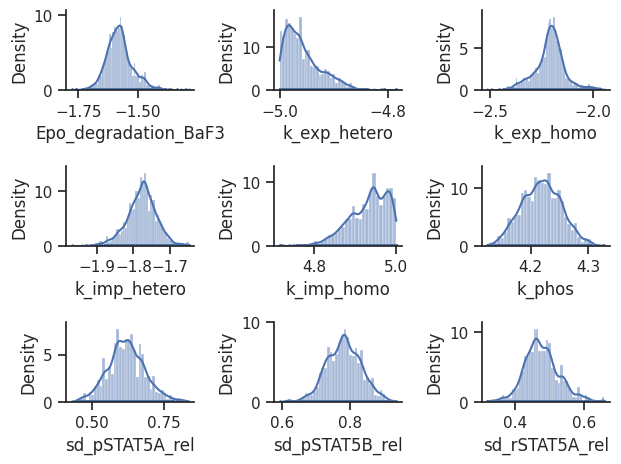

For visualization purposes, we can visualize the trace of the objective function value, as well as a scatter plot of the parameters, just like in the optimization. We do omit the scatter plot here, as it has a very large size.

[37]:

# plot objective function trace

visualize.sampling_fval_traces(result);

/home/docs/checkouts/readthedocs.org/user_builds/pypesto/envs/stable/lib/python3.11/site-packages/pypesto/visualize/sampling.py:79: UserWarning: Burn in index not found in the results, the full chain will be shown.

You may want to use, e.g., `pypesto.sample.geweke_test`.

_, params_fval, _, _, _ = get_data_to_plot(

[38]:

visualize.sampling_1d_marginals(result);

/home/docs/checkouts/readthedocs.org/user_builds/pypesto/envs/stable/lib/python3.11/site-packages/pypesto/visualize/sampling.py:1293: UserWarning: Burn in index not found in the results, the full chain will be shown.

You may want to use, e.g., `pypesto.sample.geweke_test`.

nr_params, params_fval, theta_lb, theta_ub, param_names = get_data_to_plot(

5. Saving results

Lastly, the whole process took quite some time, but is not necessarily finished. It is therefore very useful, to be able to save the result as is. pyPESTO uses the HDF5 format, and with two very short commands we are able to read and write a result from and to an HDF5 file.

Save result object in HDF5 File

[39]:

# create temporary file

fn = tempfile.NamedTemporaryFile(suffix=".hdf5", delete=False)

# write result with write_result function.

# Choose which parts of the result object to save with

# corresponding booleans.

store.write_result(

result=result,

filename=fn.name,

problem=True,

optimize=True,

sample=True,

profile=True,

)

Reload results

[40]:

# Read result

result2 = store.read_result(fn, problem=True)

# close file

fn.close()

/home/docs/checkouts/readthedocs.org/user_builds/pypesto/envs/stable/lib/python3.11/site-packages/pypesto/store/read_from_hdf5.py:304: UserWarning: You are loading a problem. This problem is not to be used without a separately created objective.

result.problem = pypesto_problem_reader.read()

As the warning already suggests, we need to assign the problem again correctly.

[41]:

result2.problem = problem

Now we are able to quickly load the results and visualize them.

Plot (reloaded) results

[42]:

# plot profiles

pypesto.visualize.profiles(result2);