MCMC sampling diagnostics¶

In this notebook, we illustrate how to assess the quality of your MCMC samples, e.g. convergence and auto-correlation, in pyPESTO.

The pipeline¶

First, we load the model and data to generate the MCMC samples from. In this example we show a toy example of a conversion reaction, loaded as a PEtab problem.

[1]:

import pypesto

import pypesto.petab

import pypesto.optimize as optimize

import pypesto.sample as sample

import pypesto.visualize as visualize

import petab

import numpy as np

import logging

import matplotlib.pyplot as plt

# log diagnostics

logger = logging.getLogger("pypesto.sample.diagnostics")

logger.setLevel(logging.INFO)

logger.addHandler(logging.StreamHandler())

# import to petab

petab_problem = petab.Problem.from_yaml(

"conversion_reaction/conversion_reaction.yaml")

# import to pypesto

importer = pypesto.petab.PetabImporter(petab_problem)

# create problem

problem = importer.create_problem()

Create the sampler object, in this case we will use adaptive parallel tempering with 3 temperatures.

[2]:

sampler = sample.AdaptiveParallelTemperingSampler(

internal_sampler=sample.AdaptiveMetropolisSampler(),

n_chains=3)

First, we will initiate the MCMC chain at a “random” point in parameter space, e.g. \(\theta_{start} = [3, -4]\)

[3]:

result = sample.sample(problem, n_samples=10000, sampler=sampler, x0=np.array([3,-4]))

elapsed_time = result.sample_result.time

print(f'Elapsed time: {round(elapsed_time,2)}')

100%|██████████| 10000/10000 [00:24<00:00, 401.71it/s]

Elapsed time: 25.04

[4]:

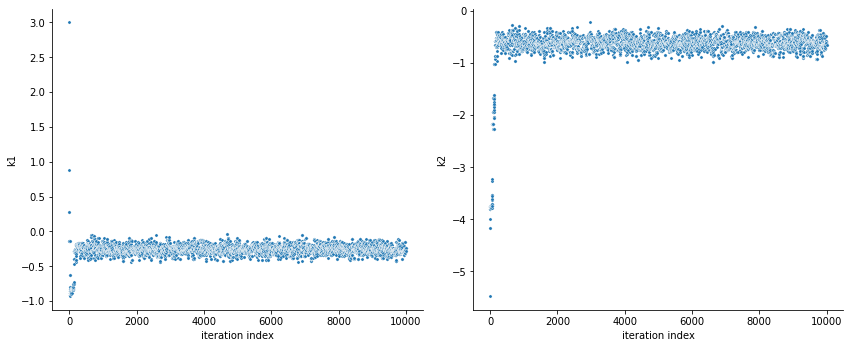

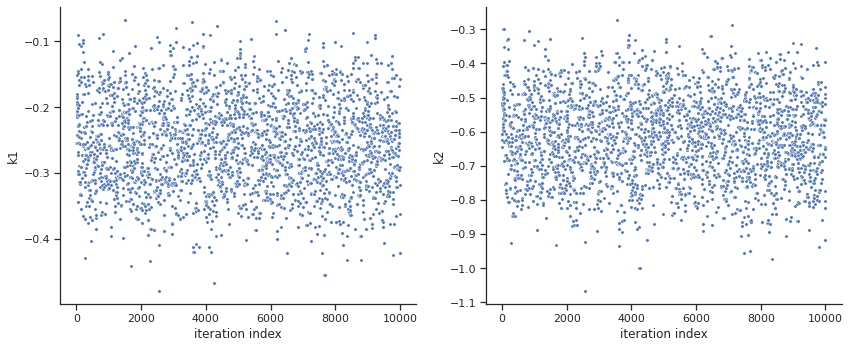

ax = visualize.sampling_parameters_trace(result, use_problem_bounds=False, size=(12,5))

Burn in index not found in the results, the full chain will be shown.

You may want to use, e.g., 'pypesto.sample.geweke_test'.

By visualizing the chains, we can see a warm up phase occurring until convergence of the chain is reached. This is commonly known as “burn in” phase and should be discarded. An automatic way to evaluate and find the index of the chain in which the warm up is finished can be done by using the Geweke test.

[5]:

sample.geweke_test(result=result)

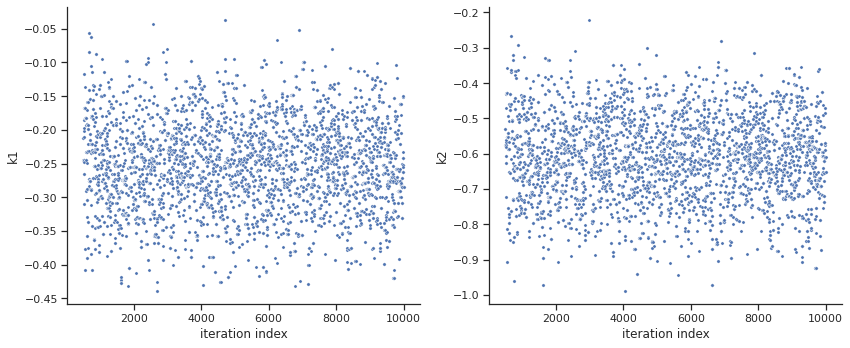

ax = visualize.sampling_parameters_trace(result, use_problem_bounds=False, size=(12,5))

Geweke burn-in index: 500

[6]:

ax = visualize.sampling_parameters_trace(result, use_problem_bounds=False, full_trace=True, size=(12,5))

Calculate the effective sample size per computation time. We save the results in a variable as we will compare them later.

[7]:

sample.effective_sample_size(result=result)

ess = result.sample_result.effective_sample_size

print(f'Effective sample size per computation time: {round(ess/elapsed_time,2)}')

Estimated chain autocorrelation: 8.44989705167994

Estimated effective sample size: 1005.4077783112965

Effective sample size per computation time: 40.15

Commonly, as a first step, optimization is performed, in order to find good parameter point estimates.

[8]:

res = optimize.minimize(problem, n_starts=10)

By passing the result object to the function, the previously found global optimum is used as starting point for the MCMC sampling.

[9]:

res = sample.sample(problem, n_samples=10000, sampler=sampler, result=res)

elapsed_time = res.sample_result.time

print('Elapsed time: '+str(round(elapsed_time,2)))

100%|██████████| 10000/10000 [00:24<00:00, 400.95it/s]

Elapsed time: 25.12

When the sampling is finished, we can analyse our results. pyPESTO provides functions to analyse both the sampling process as well as the obtained sampling result. Visualizing the traces e.g. allows to detect burn-in phases, or fine-tune hyperparameters. First, the parameter trajectories can be visualized:

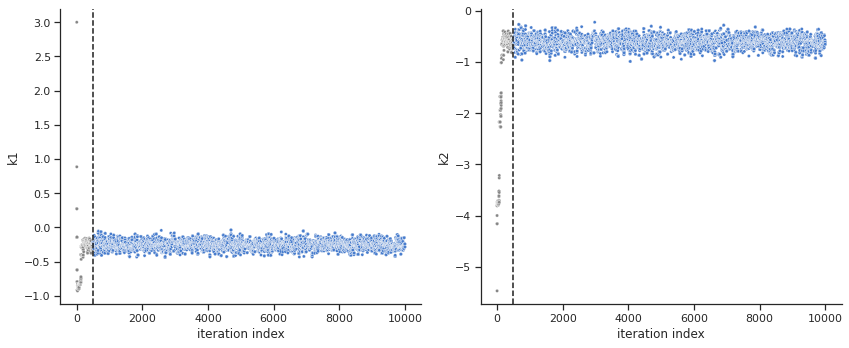

[10]:

ax = visualize.sampling_parameters_trace(res, use_problem_bounds=False, size=(12,5))

Burn in index not found in the results, the full chain will be shown.

You may want to use, e.g., 'pypesto.sample.geweke_test'.

By visual inspection one can see that the chain is already converged from the start. This is already showing the benefit of initiating the chain at the optimal parameter vector. However, this may not be always the case.

[11]:

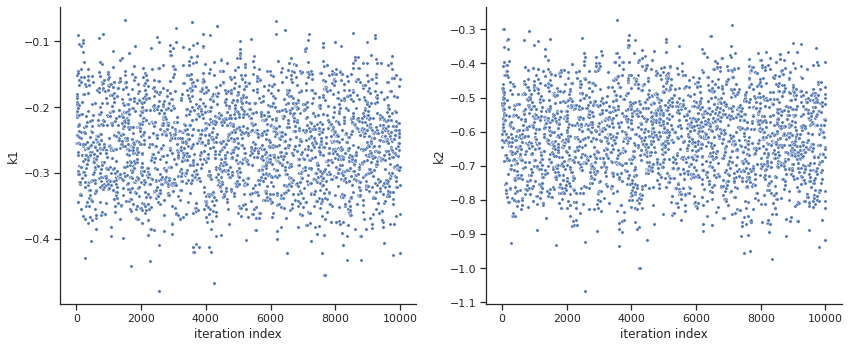

sample.geweke_test(result=res)

ax = visualize.sampling_parameters_trace(res, use_problem_bounds=False, size=(12,5))

Geweke burn-in index: 0

[12]:

sample.effective_sample_size(result=res)

ess = res.sample_result.effective_sample_size

print(f'Effective sample size per computation time: {round(ess/elapsed_time,2)}')

Estimated chain autocorrelation: 7.9935891381126964

Estimated effective sample size: 1112.0143300318373

Effective sample size per computation time: 44.27

[ ]: